Potential ingredient for alien life found on Titan

A molecule that could help build otherworldly life is present on Saturn’s moon Titan, researchers have discovered.

Vinyl cyanide, a compound predicted to form membranelike structures, is created in Titan’s upper atmosphere, scientists report July 28 in Science Advances. There’s enough vinyl cyanide (C2H3CN) in the moon’s liquid methane seas to make about 10 million cell-like balls per cubic centimeter of liquid, researchers calculate. By comparison, about a million bacteria are found in a cubic centimeter of ocean water near shore on Earth.

“It’s very positive news for putative-Titan-life studies,” says Jonathan Lunine, a planetary scientist at Cornell University who was not involved in the new study.

Titan has no liquid water on its surface, and liquid water is usually considered a prerequisite for life. Instead, freezing-cold Titan has liquid methane. There’s even a methane cycle that mimics Earth’s water cycle (SN: 3/21/15, p. 32). But Titan is so cold, usually about –178° Celsius, that the smallest unit of life on Earth, the cell, would shatter in the moon’s frigid seas.

In 2015, Lunine and Cornell colleagues James Stevenson and Paulette Clancy proposed a way life might exist in methane. Computer simulations predicted that vinyl cyanide (also called acrylonitrile or propenenitrile) could make flexible bubbles called azotosomes that would be stable in liquid methane (SN: 4/30/16, p. 28). Those bubbles might act much as cell membranes do on Earth, sheltering genetic material and concentrating biochemical reactions needed for life.

When the Cornell researchers suggested the presence of azotosomes on Titan, carbon, hydrogen and nitrogen had already been detected in abundance in the moon’s atmosphere. But no one knew whether those atoms joined to make vinyl cyanide there. The Saturn probe Cassini had detected a molecule of the right mass to be vinyl cyanide, but couldn’t definitively identify the molecule’s chemical makeup.

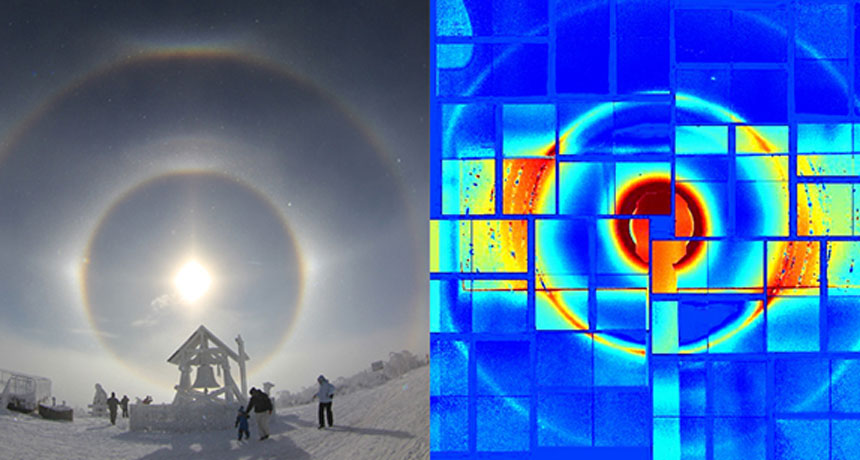

But evidence for the chemical compound was buried in archived data from a large radio telescope, Maureen Palmer of Catholic University of America in Washington, D.C., and colleagues discovered. Palmer, an astrochemistry and astrobiology researcher, combed data collected by the Atacama Large Millimeter/submillimeter Array, or ALMA, in Chile between February 22 and May 27, 2014.

Astronomers point ALMA at Titan to calibrate the telescope because the moon has known brightness levels, says Palmer, who also works at NASA’s Goddard Space Flight Center in Greenbelt, Md. The team used that calibration data to detect the signature of vinyl cyanide at specific wavelengths of light and calculate its abundance.

“This is a pretty secure detection,” says Ralph Lorenz, a planetary scientist at the Johns Hopkins University Applied Physics Lab in Laurel, Md.

Even with confirmation of vinyl cyanide, researchers can’t say that azotosomes form on Titan. That’s probably not something telescopes can determine, Lunine says. A probe would need to sample Titan’s seas to detect the structures.

And even detecting azotosomes would not mean there’s life on Titan, says Lorenz. The moon’s extreme cold may hamper metabolism. What’s more, no one knows whether liquid methane can take the place of water for supporting life, he says. “If I were a betting man, I’d say Titan does not have life.”