Cause of mass starfish die-offs is still a mystery

In the summer of 2013, an epidemic began sweeping through the intertidal zone off the west coast of North America. The victims were several species of sea star, including Pisaster ochraceus, a species that comes in orange and purple variants. (It’s also notable because it’s the starfish that provided ecology with the fundamental concept of a keystone species.) Affected individuals appeared to “melt,” losing their grip on the rocks to which they were attached, and then losing their arms. This sea star wasting disease, as it is known, soon killed sea stars from Baja California to Alaska.

This wasn’t the first outbreak of sea star wasting disease. A 1978 outbreak in the Gulf of California, for instance, killed so many Heliaster kubiniji sun stars that the once ubiquitous species is now incredibly rare.

These past incidents, though, happened fast and within smaller regions, so scientists had struggled to figure out what had happened. With the latest outbreak happening over such a large — and well-studied — region and period of time, marine biologists have been able to gather more data on the disease than ever before. And they’re getting closer to figuring out just what happened in this latest incident.

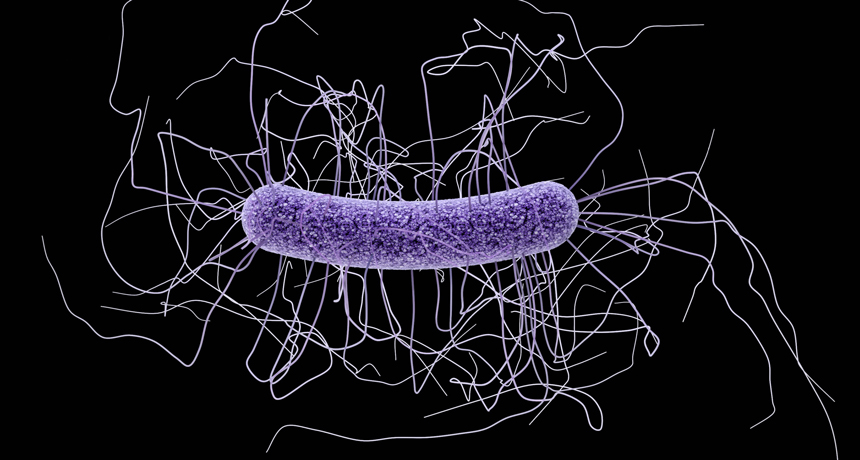

One likely factor is the sea star-associated densovirus, which, in 2014, scientists reported finding in greater abundance in starfish with sea star wasting disease than in healthy sea stars. But the virus can’t be the only cause of the disease; it’s found in both healthy and sick sea stars, and it has been around since at least 1942, the earliest year it has been found in museum specimens. So there must be some other factor at play.

Earlier this year, scientists studying the outbreak in Washington state reported in the Proceedings of the Royal Society B that warm waters may increase disease progression and rates of death. Studies of California starfish came to a similar conclusion. But a new study, appearing May 4 in PLOS One, finds that may not be true for sea stars in Oregon. Bruce Menge and colleagues at Oregon State University took advantage of their long-term study of Oregon starfish to evaluate what happened to sea stars during the recent epidemic and found that wasting disease increased with cooler, not warmer, temperatures. “Given conflicting results on the role of temperature as a trigger of [sea star wasting disease], it seems most likely that multiple factors interacted in complex ways to cause the outbreak,” they conclude.

What those factors are, though, is still a mystery.

Also unclear is what long-term effects this outbreak will have on Pacific intertidal communities.

In the 1960s, Robert Paine of the University of Washington performed what is now considered a classic experiment. For years, he removed starfish from one area of rock in Makah Bay at the northwestern tip of Washington and left another bit of rock alone as a control. Without the starfish to prey on them, mussels were able to take over. The sea stars, Paine concluded, were a “keystone species” that kept the local food web in check.

If sea star wasting disease has similar effects on the Pacific intertidal food web, Menge and his colleagues write, “it would result in losses or large reductions of many species of macrophytes, anemones, limpets, chitons, sea urchins and other organisms from the low intertidal zone.”

What happens, the group says, may depend on how quickly the disease disappears from the region and how many young sea stars can grow up and start munching on mussels.